Isolating sensitive data and operations is a fundamental issue in computing. Ideally, we want to minimize the possibility of a software defect compromising the security of a device. However, in order for the software we write to be useful, it typically needs to interact with that sensitive data in some form or fashion. So how do we interact with sensitive data without being able to access it?

The answer is that we bring only the operations that must access the sensitive data closer to the data, then force all other software to invoke those operations via some sort of interface. These restrictions are implemented in hardware, either in the same processor that the insecure software runs on, or on a physically separate component. When the hardware protections are implemented in the same processor, the secure environment is typically referred to as a Trusted Execution Environment (TEE) or secure enclave. When they are implemented in a separate component, that component may be referred to as a Hardware Security Module (HSM), smart card, Secure Element (SE), or, if you’re Apple, a Secure Enclave.

The definitions vary depending on who you ask, but most folks will refer to large data center scale PCI devices, such as the Marvell LiquidSecurity devices used by Google’s Cloud HSM service, as HSMs. Smaller components, which may be present on the same board as a laptop, phone, or embedded device, are typically referred to as secure elements. These small dedicated components are also sometimes referred to as smart cards, though the prevalence of smart card chips on payment and identification cards has led to the term being more commonly associated with these passive use-cases (e.g. inserted into a reader or brought into proximity with an interrogating device). It all gets quite convoluted, but as this excellent comment from a Stack Overflow user succinctly states:

“In a nutshell, if it hurts when you drop it on your foot, it’s an HSM. If you carry it in your wallet, it’s a smartcard. If it’s a non-removable smartcard, it’s a secure element.”
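Whatever name the component goes by, the underlying pattern is the one described earlier: the secret lives next to the few operations that need it, and everything else goes through an interface. Here is a minimal software sketch of that idea. The class name `SimulatedSecureElement` and its methods are hypothetical and do not correspond to any vendor API, and HMAC-SHA256 stands in for whatever operations a real device would offer. Python cannot enforce the boundary, which is exactly why real systems rely on hardware.

```python
import hashlib
import hmac
import secrets


class SimulatedSecureElement:
    """Hypothetical software stand-in for a component that never exports its key."""

    def __init__(self) -> None:
        # The key is generated "inside" the element and has no getter.
        # Python cannot truly hide it; real isolation requires hardware.
        self.__key = secrets.token_bytes(32)

    def mac(self, message: bytes) -> bytes:
        """Return an HMAC-SHA256 tag over the message using the internal key."""
        return hmac.new(self.__key, message, hashlib.sha256).digest()

    def verify(self, message: bytes, tag: bytes) -> bool:
        """Check a tag without revealing the key to the caller."""
        return hmac.compare_digest(self.mac(message), tag)


def untrusted_application(element: SimulatedSecureElement) -> bool:
    """Caller that only ever handles messages and tags, never key material."""
    message = b"unlock door 3"
    tag = element.mac(message)
    # The tag authenticates this message and no other.
    return element.verify(message, tag) and not element.verify(b"unlock door 4", tag)
```

The application can use the key without ever being able to read it, at least as far as the interface is concerned. A defect in `untrusted_application` can misuse the operations it is offered, but it has no call that returns the key.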

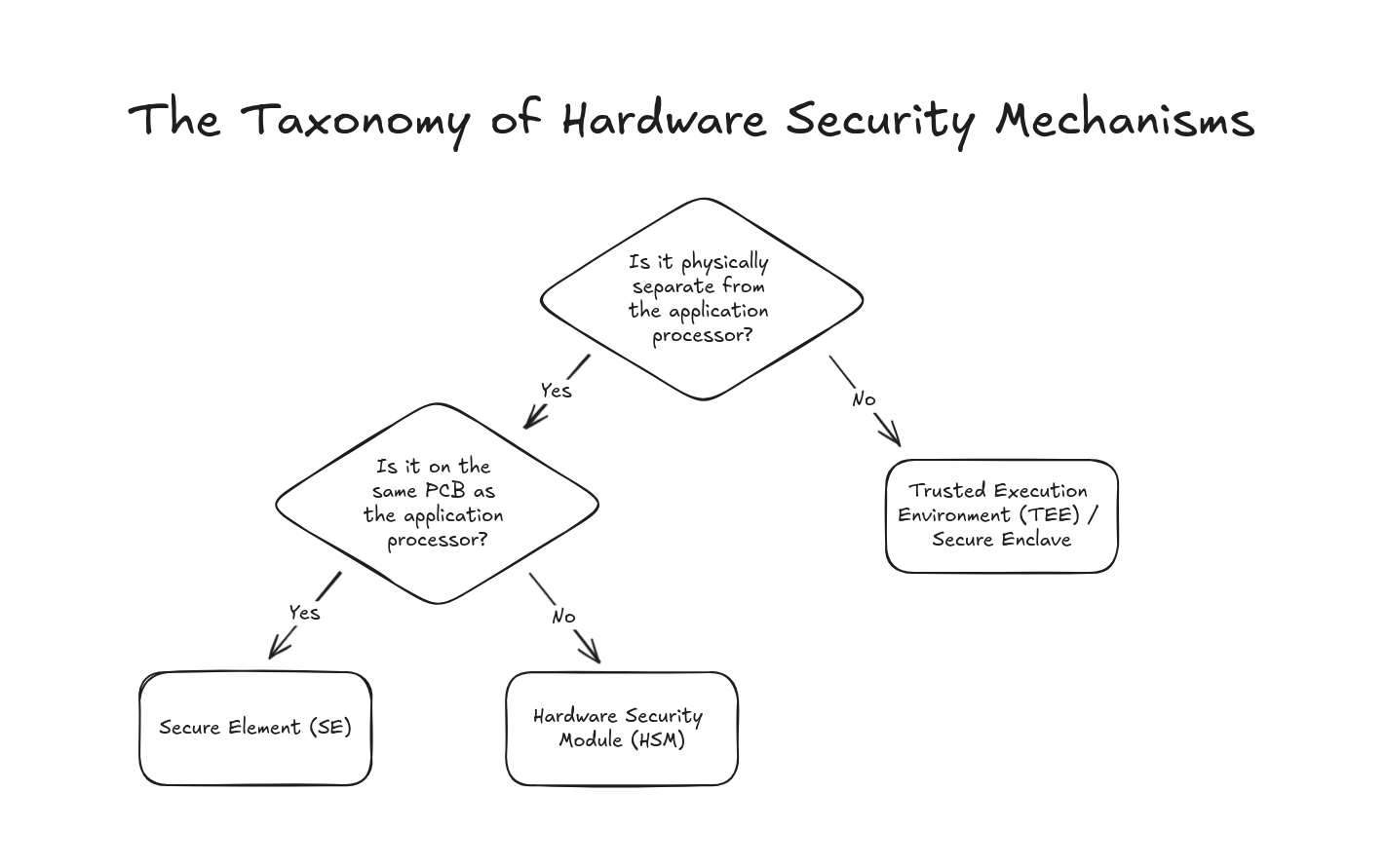

If we remove smart cards from the conversation, my own take on the organization of hardware security mechanisms, which I am certain will be viewed as overly simplistic by many, is as follows.

Two other terms that you’ll hear mentioned in this domain are Trusted Platform Module (TPM) and Root of Trust (RoT). TPM, while sometimes used to refer to a hardware component, is actually a specification, which can be implemented by hardware, software, or some combination of the two. RoT is an even less precise term. It has no universal specification, and generally refers to the combination of hardware and software that allows all other components to operate securely. A common example is secure boot, which involves verifying that firmware is signed by a specific private key prior to allowing it to execute.
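To illustrate the "verify before execute" idea behind secure boot, here is a rough sketch of a boot chain. It makes one significant substitution: real secure boot typically checks an asymmetric signature, but Python's standard library has no signature verification, so this sketch pins SHA-256 digests instead. The root of trust holds the expected digest of the first stage, and each stage carries the expected digest of the next. The `Stage`, `measure`, and `verified_boot` names are all hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    image: bytes                # the code that would run
    next_digest: Optional[str]  # expected digest of the following stage, None if last


def measure(stage: Stage) -> str:
    """Digest covering both the image and the digest it pins for the next stage."""
    pinned = (stage.next_digest or "").encode()
    return hashlib.sha256(stage.image + pinned).hexdigest()


def verified_boot(root_digest: str, stages: List[Stage]) -> List[str]:
    """Return the names of the stages that ran; raise before running a bad one."""
    booted = []
    expected: Optional[str] = root_digest
    for stage in stages:
        if expected is None or not hmac.compare_digest(measure(stage), expected):
            raise RuntimeError(f"refusing to run {stage.name}: digest mismatch")
        booted.append(stage.name)  # stand-in for handing control to the stage
        expected = stage.next_digest
    return booted


# Build the chain back to front so each stage can pin its successor.
app = Stage("application", b"app v1", None)
bootloader = Stage("bootloader", b"bootloader v1", measure(app))
root_of_trust = measure(bootloader)  # would live in immutable storage
```

Calling `verified_boot(root_of_trust, [bootloader, app])` runs both stages, while swapping in an application with a modified image raises before that stage would execute. Only the first digest needs to live in storage that an attacker cannot modify, which is why so much depends on the root.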

With all of these security options available, it can be difficult to determine what to use when architecting a secure system. For example, if using an Arm processor that supports TrustZone, which enables separating secure (TEE) and non-secure execution on the same processor, you may choose to leverage it rather than introducing a new component that drives up cost and complexity. However, TEE solutions alone may fail to guard against physical tampering, as well as side-channel attacks. Arm notes in their security documentation some of the risks involved with using only TrustZone for isolation:

“TrustZone technology for Armv8-M processors or the Security Extension in the Armv8-M architecture is designed to provide hardware enforced isolation between software environments. This arrangement protects against most software attacks and covers the security needs for many applications. Side channels are a class of attacks that aim to infer information about the secret program state based on observations arising from the implementation details of a program, rather than on flaws in the protocol or algorithm itself… The Security Extensions for the Armv8-M architecture do not claim to protect against side channel attacks due to control flow or memory access patterns. Indeed, such attacks are not specific to the Armv8-M architecture; they may apply to any code with secret-dependent control flow or memory access patterns.”
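The phrase "secret-dependent control flow" is easier to appreciate with a small example. The sketch below is not Arm-specific and uses hypothetical function names. The first comparison returns as soon as a byte differs, so the amount of work it does depends on the secret. A step counter stands in for the timing an attacker would actually measure. The second delegates to `hmac.compare_digest` from Python's standard library, which is designed to avoid that kind of content-dependent early exit.

```python
import hmac
from typing import Tuple


def leaky_equals(secret: bytes, guess: bytes) -> Tuple[bool, int]:
    """Early-exit comparison. Returns (result, number of bytes compared)."""
    steps = 0
    if len(secret) != len(guess):
        return False, steps
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            # Leaving here reveals how many leading bytes were correct.
            return False, steps
    return True, steps


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison whose running time is not meant to depend on where bytes differ."""
    return hmac.compare_digest(secret, guess)


secret = b"hunter2!"
print(leaky_equals(secret, b"xxxxxxxx"))  # (False, 1): wrong at the first byte
print(leaky_equals(secret, b"huntxxxx"))  # (False, 5): first four bytes were right
print(constant_time_equals(secret, b"huntxxxx"))  # False, with no step count to leak
```

An attacker who can observe the step count, or the time it approximates, can recover the secret one byte at a time. Isolation between secure and non-secure worlds does nothing to prevent this if the secure code itself behaves like `leaky_equals`.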

When physical tampering is a concern, some processors, such as the Arm Cortex-M35P, offer physical tamper resistance. However, side-channel attacks may still be possible when the memory of the secure and non-secure execution environments is not physically isolated. For further isolation, and potentially for acceleration of cryptographic operations, a secure element, such as one of the processors in the Microchip CryptoAuthentication family, may be necessary. This isn’t to say that secure elements are impervious to physical access attacks. In fact, multiple processors in the CryptoAuthentication family have been found to be exploitable via Laser Fault Injection (LFI). It is also entirely possible that the firmware on the secure element itself could have a defect.

Microchip’s ATECC608 has become particularly popular in embedded devices due to its low power consumption and wide variety of features. It supports secure storage, Elliptic Curve Diffie-Hellman (ECDH), Elliptic Curve Digital Signature Algorithm (ECDSA), Advanced Encryption Standard (AES), and more. It is also supported by Microchip’s Trust Platform, and a source-available SDK, CryptoAuthLib, is provided to integrate the ATECC608 with many microcontrollers and microprocessors. Though not previously mentioned, another benefit of using a secure element is the ability to leverage any secure provisioning support offered by the silicon vendor. Microchip’s Trust Platform provides a number of different solutions, including vendor-managed certificate provisioning and customer-managed certificate provisioning.

One of the reasons that I am fascinated by hardware security mechanisms is that, despite the critical role they play in a system, much of the information about how to use them and how they work is kept behind NDAs and proprietary firmware. This is even more true when it comes to combining multiple hardware security mechanisms, which is typically required for a sufficient security strategy. Now that we have a common understanding of the types of mechanisms available to us, we can explore each of them in greater depth.