In our last post, we explored the Nordic VPR RISC-V processor through the lens of the peripheral processor (PPR) on the nRF54H20. While we demonstrated how the application processor can configure and start a VPR processor, we stopped short of demonstrating any further communication between them. Most meaningful use cases of the PPR and the fast lightweight peripheral processor (FLPR) involve communicating with the controlling processor.

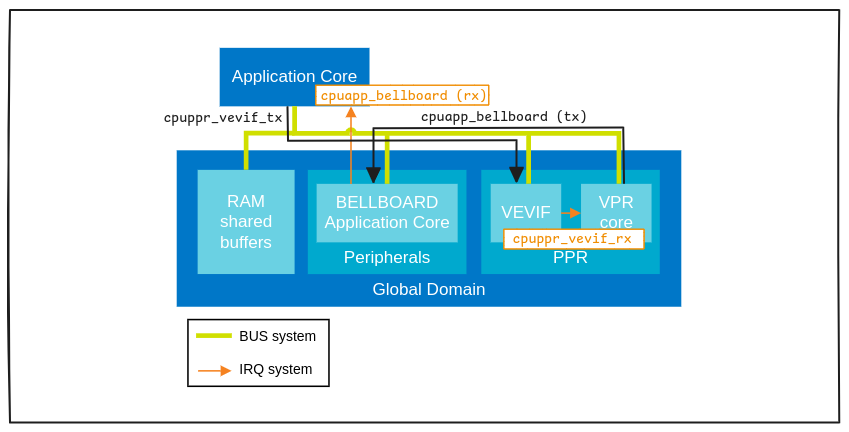

Nordic uses two different hardware peripherals for inter-processor communication (IPC) on the nRF54H20: VEVIF (VPR Event Interface) and BELLBOARD. The former is used for communication with the VPR RISC-V processors, while the latter is used for communication with the Arm processors. Zephyr Multi-Channel Inter-Processor Mailbox (MBOX) transmitting and receiving drivers are implemented for both.

It is easy to get confused about the organization of drivers used by each processor, but the breakdown in the case of communication between the application processor (cpuapp) and PPR (cpuppr) on the nRF54H20 is as follows.

- VEVIF Task TX: used by cpuapp to signal the cpuppr
- VEVIF Task RX: used by cpuppr to receive signals from the cpuapp
- BELLBOARD TX: used by cpuppr to signal the cpuapp
- BELLBOARD RX: used by cpuapp to receive signals from the cpuppr

These drivers provide Nordic’s peripheral interface tasks and events model. Tasks are used to trigger functionality on a peripheral, whereas events are used by a peripheral to notify the CPU or another peripheral about some event occurring. The Distributed Programmable Peripheral Interconnect (DPPI) allows for configuration of channels so that events can be routed between peripherals on a one-to-one, one-to-many, many-to-one, or many-to-many model without a CPU having to be involved at all. Without it, coordinating peripherals would require a peripheral generating an interrupt on a CPU, then that CPU servicing the interrupt by triggering functionality on another peripheral. This not only creates additional overhead, but also occupies the CPU when it may have other operations to perform. However, in the case of inter-processor communication, we are communicating directly between CPUs and want to raise interrupts. Therefore, we avoid using the DPPI and instead communicate tasks directly to the PPR (VEVIF) and the application processor (BELLBOARD) to generate interrupts.

The Zephyr MBOX API supports signalling and data transfer modes. If a peripheral supports data transfer, the MBOX API can be used directly for communicating between processors. The VEVIF and BELLBOARD peripherals do not support data transfer, so we must leverage the Zephyr IPC Service. It abstracts communication by writing to shared memory, then leveraging the MBOX API to signal the other processor to read from it. In order to do so, partitions in shared memory for sending in each direction (if necessary) must be configured.
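
The write-then-signal pattern described above can be modelled in a few lines of plain C. This is only a host-side sketch, not the Zephyr IPC Service API: `shm_channel`, `channel_send`, and `on_doorbell` are hypothetical names, and a function pointer stands in for the signalling-only mailbox.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SHM_SIZE 64u

/* Stand-in for a one-directional shared memory region. */
struct shm_channel {
	uint8_t data[SHM_SIZE];
	size_t len;
	/* Stand-in for the signalling-only mailbox: it carries no payload. */
	void (*doorbell)(struct shm_channel *ch);
};

/* Sender side: copy the payload into shared memory, then ring the doorbell. */
static int channel_send(struct shm_channel *ch, const void *buf, size_t len)
{
	assert(ch != NULL && buf != NULL);
	if (len > SHM_SIZE) {
		return -1; /* payload does not fit in the region */
	}
	memcpy(ch->data, buf, len);
	ch->len = len;
	if (ch->doorbell != NULL) {
		ch->doorbell(ch); /* on hardware this would raise an interrupt */
	}
	return 0;
}

/* Receiver side: what a handler might do once it has been signalled. */
static uint8_t last_received[SHM_SIZE];
static size_t last_received_len;

static void on_doorbell(struct shm_channel *ch)
{
	memcpy(last_received, ch->data, ch->len);
	last_received_len = ch->len;
}
```

Sending in both directions would need two such channels, one per direction, which is why a separate shared memory partition is configured for each.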

The same RAM3x region used in the last post for programming the PPR is used for IPC shared memory. This can be observed in the memory map devicetree include file.

    cpuppr_ram3x_region: memory@2fc00000 {
        compatible = "nordic,owned-memory";
        reg = <0x2fc00000 DT_SIZE_K(64)>;
        status = "disabled";
        nordic,access = <NRF_OWNER_ID_APPLICATION NRF_PERM_RWX>;
        #address-cells = <1>;
        #size-cells = <1>;
        ranges = <0x0 0x2fc00000 0x10000>;

        cpuppr_code_data: memory@0 {
            reg = <0x0 DT_SIZE_K(62)>;
        };

        cpuapp_cpuppr_ipc_shm: memory@f800 {
            reg = <0xf800 DT_SIZE_K(1)>;
        };

        cpuppr_cpuapp_ipc_shm: memory@fc00 {
            reg = <0xfc00 DT_SIZE_K(1)>;
        };
    };
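
As a quick sanity check on the memory map, the child offsets can be translated to absolute addresses with a little arithmetic. The sketch below simply re-encodes the values from the node above in plain C; the `region` struct and helper names are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Values copied from the cpuppr_ram3x_region node. */
#define RAM3X_BASE 0x2fc00000u
#define RAM3X_SIZE (64u * 1024u) /* DT_SIZE_K(64) */

/* A child region, described by its offset and size within the parent. */
struct region {
	uint32_t offset;
	uint32_t size;
};

static const struct region cpuppr_code_data      = { 0x0000u, 62u * 1024u };
static const struct region cpuapp_cpuppr_ipc_shm = { 0xf800u, 1u * 1024u };
static const struct region cpuppr_cpuapp_ipc_shm = { 0xfc00u, 1u * 1024u };

/* Translate a child offset to an absolute address via the parent's ranges. */
static uint32_t region_abs_addr(const struct region *r)
{
	assert(r->offset + r->size <= RAM3X_SIZE);
	return RAM3X_BASE + r->offset;
}

/* First offset past the end of a child region, relative to the parent. */
static uint32_t region_end_offset(const struct region *r)
{
	return r->offset + r->size;
}
```

The three children tile the 64 KiB parent exactly: 62 KiB of code and data end at offset 0xf800, and the two 1 KiB IPC buffers land at 0x2fc0f800 and 0x2fc0fc00, the addresses inspected with GDB later in this post.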

We’ll use the cpuapp_cpuppr_ipc_shm for cpuapp to cpuppr data transfer, and cpuppr_cpuapp_ipc_shm for the other direction. The IPC config devicetree include file is used by both the PPR and the application processor for the base IPC configuration. The cpuapp_cpuppr_ipc node indicates compatibility with the Inter-Core Messaging (ICMsg) IPC service backend.

    cpuapp_cpuppr_ipc: ipc-2-13 {
        compatible = "zephyr,ipc-icmsg";
        status = "disabled";
        dcache-alignment = <32>;
        mboxes = <&cpuapp_bellboard 13>,
                 <&cpuppr_vevif 12>;
    };

The application processor devicetree file configures the cpuapp to cpuppr shared memory for sending (tx) and the cpuppr to cpuapp region for receiving (rx). It also assigns the rx name to the first MBOX (cpuapp_bellboard) and tx to the second (cpuppr_vevif).

    &cpuapp_cpuppr_ipc {
        mbox-names = "rx", "tx";
        tx-region = <&cpuapp_cpuppr_ipc_shm>;
        rx-region = <&cpuppr_cpuapp_ipc_shm>;
    };

The PPR devicetree file does the opposite.

    &cpuapp_cpuppr_ipc {
        mbox-names = "tx", "rx";
        tx-region = <&cpuppr_cpuapp_ipc_shm>;
        rx-region = <&cpuapp_cpuppr_ipc_shm>;
    };
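
Because both processors share one mboxes list and differ only in mbox-names, it can help to spell out which mailbox each side ends up transmitting on. The following is a plain C model of that name-to-position lookup, using hypothetical types and helper names rather than anything from Zephyr.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct mbox_spec {
	const char *dev;
	unsigned int channel;
};

/* mboxes from the shared cpuapp_cpuppr_ipc node, in declaration order. */
static const struct mbox_spec mboxes[] = {
	{ "cpuapp_bellboard", 13 },
	{ "cpuppr_vevif", 12 },
};

/* mbox-names as assigned by each processor's devicetree file. */
static const char *const cpuapp_names[] = { "rx", "tx" };
static const char *const cpuppr_names[] = { "tx", "rx" };

/* Return the mailbox whose position matches the requested name. */
static const struct mbox_spec *mbox_by_name(const char *const names[],
					    const char *name)
{
	assert(names != NULL && name != NULL);
	for (size_t i = 0; i < sizeof(mboxes) / sizeof(mboxes[0]); i++) {
		if (strcmp(names[i], name) == 0) {
			return &mboxes[i];
		}
	}
	return NULL;
}
```

With these tables, the application processor transmits on cpuppr_vevif channel 12 and the PPR transmits on cpuapp_bellboard channel 13, so one side's tx is always the other side's rx.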

The cpuapp_bellboard is first defined in the common nRF54H20 devicetree include file.

    cpuapp_bellboard: mailbox@9a000 {
        reg = <0x9a000 0x1000>;
        status = "disabled";
        power-domains = <&gpd NRF_GPD_FAST_ACTIVE0>;
        #mbox-cells = <1>;
    };

Then, for each processor, the relevant additional properties are added that allow the same label (cpuapp_bellboard) to be used for both the sending and receiving sides.

    &cpuapp_bellboard {
        compatible = "nordic,nrf-bellboard-rx";
    };

    &cpuapp_bellboard {
        compatible = "nordic,nrf-bellboard-tx";
    };

Even more properties are defined in the board specific devicetree file for the application processor.

The definition of the cpuppr_vevif MBOX differs slightly. Instead of a single global_peripheral with consistent memory mapped registers, the PPR’s sending VEVIF interface is defined as a subnode on the cpuppr_vpr global peripheral with memory mapped registers, while the receiving VEVIF interface is defined as a subnode of the cpuppr itself.

    cpuppr_vpr: vpr@908000 {
        compatible = "nordic,nrf-vpr-coprocessor";
        reg = <0x908000 0x1000>;
        status = "disabled";
        #address-cells = <1>;
        #size-cells = <1>;
        ranges = <0x0 0x908000 0x1000>;
        power-domains = <&gpd NRF_GPD_SLOW_ACTIVE>;

        cpuppr_vevif_tx: mailbox@0 {
            compatible = "nordic,nrf-vevif-task-tx";
            reg = <0x0 0x1000>;
            status = "disabled";
            #mbox-cells = <1>;
            nordic,tasks = <16>;
            nordic,tasks-mask = <0xfffffff0>;
        };
    };

    cpuppr: cpu@d {
        compatible = "nordic,vpr";
        reg = <13>;
        device_type = "cpu";
        clocks = <&fll16m>;
        clock-frequency = <DT_FREQ_M(16)>;
        riscv,isa = "rv32emc";
        nordic,bus-width = <32>;

        cpuppr_vevif_rx: mailbox {
            compatible = "nordic,nrf-vevif-task-rx";
            status = "disabled";
            interrupt-parent = <&cpuppr_clic>;
            interrupts = <0 NRF_DEFAULT_IRQ_PRIORITY>,
                         <1 NRF_DEFAULT_IRQ_PRIORITY>,
                         <2 NRF_DEFAULT_IRQ_PRIORITY>,
                         <3 NRF_DEFAULT_IRQ_PRIORITY>,
                         <4 NRF_DEFAULT_IRQ_PRIORITY>,
                         <5 NRF_DEFAULT_IRQ_PRIORITY>,
                         <6 NRF_DEFAULT_IRQ_PRIORITY>,
                         <7 NRF_DEFAULT_IRQ_PRIORITY>,
                         <8 NRF_DEFAULT_IRQ_PRIORITY>,
                         <9 NRF_DEFAULT_IRQ_PRIORITY>,
                         <10 NRF_DEFAULT_IRQ_PRIORITY>,
                         <11 NRF_DEFAULT_IRQ_PRIORITY>,
                         <12 NRF_DEFAULT_IRQ_PRIORITY>,
                         <13 NRF_DEFAULT_IRQ_PRIORITY>,
                         <14 NRF_DEFAULT_IRQ_PRIORITY>,
                         <15 NRF_DEFAULT_IRQ_PRIORITY>;
            #mbox-cells = <1>;
            nordic,tasks = <16>;
            nordic,tasks-mask = <0xfffffff0>;
        };
    };
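
The nordic,tasks and nordic,tasks-mask properties appear on both VEVIF nodes. One plausible reading, and it is an assumption here rather than something confirmed above, is that a channel is usable only if its index is below nordic,tasks and its bit is set in the mask. Under that assumption, a validity check could be sketched as:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Values copied from the cpuppr_vevif_tx and cpuppr_vevif_rx nodes. */
#define VEVIF_TASKS_NUM  16u
#define VEVIF_TASKS_MASK 0xfffffff0u

/* The bit test below only makes sense for a 32-bit mask. */
static_assert(VEVIF_TASKS_NUM <= 32u, "task count must fit the 32-bit mask");

/*
 * Assumption: a channel is usable when its index is below nordic,tasks
 * and its bit is set in nordic,tasks-mask.
 */
static bool vevif_channel_is_valid(uint32_t id)
{
	return (id < VEVIF_TASKS_NUM) &&
	       ((VEVIF_TASKS_MASK & (1u << id)) != 0u);
}
```

If that reading holds, channels 4 through 15 are available and channels 0 through 3 are masked out, which would be consistent with the IPC node using channel 12 on cpuppr_vevif.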

The generic cpuppr_vevif label is then applied for each processor.

    cpuppr_vevif: &cpuppr_vevif_tx {};

    cpuppr_vevif: &cpuppr_vevif_rx {};

Behind the scenes, the Arm Cortex-M33 processors are using the Nested Vectored Interrupt Controller (NVIC) and the RISC-V processors are using the Core Local Interrupt Controller (CLIC). We’ll cover them in greater depth in a future post, but for now we can see that the cpuppr_vevif_rx mailbox references the cpuppr_clic as its interrupt-parent property above, while the cpuapp has a private node for its NVIC, and it is defined as the parent at the soc level for the application processor.

interrupt-parent is an inherited property, so assigning the property on a node will also make it apply to all subnodes unless specified otherwise.

    cpuapp_ppb: cpuapp-ppb-bus {
        #address-cells = <1>;
        #size-cells = <1>;

        cpuapp_systick: timer@e000e010 {
            compatible = "arm,armv8m-systick";
            reg = <0xe000e010 0x10>;
            status = "disabled";
        };

        cpuapp_nvic: interrupt-controller@e000e100 {
            compatible = "arm,v8m-nvic";
            reg = <0xe000e100 0xc00>;
            arm,num-irq-priority-bits = <3>;
            #interrupt-cells = <2>;
            interrupt-controller;
            #address-cells = <1>;
        };
    };

    / {
        soc {
            compatible = "simple-bus";
            interrupt-parent = <&cpuapp_nvic>;
            ranges;

            stmesp: memory@a2000000 {
                compatible = "arm,stmesp";
                reg = <0xa2000000 0x1000000>;
            };
        };
    };
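
The inheritance of interrupt-parent can be pictured as a walk from a node towards the root until some ancestor sets the property. The sketch below is a deliberately simplified model of that lookup with a hypothetical `dt_node` struct and a flattened tree; it is not how the devicetree tooling is implemented.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Minimal model of a devicetree node, for this illustration only. */
struct dt_node {
	const char *name;
	const struct dt_node *parent;
	const char *interrupt_parent; /* NULL when the property is absent */
};

/* Walk towards the root until a node sets interrupt-parent explicitly. */
static const char *resolve_interrupt_parent(const struct dt_node *node)
{
	for (; node != NULL; node = node->parent) {
		if (node->interrupt_parent != NULL) {
			return node->interrupt_parent;
		}
	}
	return NULL; /* no ancestor sets the property */
}

/* Flattened version of the nodes shown in this post. */
static const struct dt_node root     = { "/", NULL, NULL };
static const struct dt_node soc      = { "soc", &root, "cpuapp_nvic" };
static const struct dt_node stmesp   = { "stmesp", &soc, NULL };
static const struct dt_node cpuppr   = { "cpuppr", &root, NULL };
static const struct dt_node vevif_rx = { "cpuppr_vevif_rx", &cpuppr, "cpuppr_clic" };
```

In this model stmesp resolves to cpuapp_nvic through its soc parent, while cpuppr_vevif_rx resolves to cpuppr_clic because it sets the property itself.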

With an understanding of the hardware configuration, we can use the Zephyr icmsg sample to demonstrate communication between the application processor and the PPR. I recently added support for the nRF54H20DK board to this sample, which effectively consisted of just enabling the previously discussed nodes via devicetree overlays. For the application processor, it was also necessary to reassign the ipc0 label.

    / {
        chosen {
            /delete-property/ zephyr,bt-hci;
        };
    };

    /delete-node/ &ipc0;

    ipc0: &cpuapp_cpuppr_ipc {
        status = "okay";
    };

    &cpuapp_bellboard {
        status = "okay";
    };

    &cpuppr_vevif {
        status = "okay";
    };

For the PPR, hardware flow control needed to be disabled for console output over UART.

    ipc0: &cpuapp_cpuppr_ipc {
        status = "okay";
    };

    &cpuppr_vevif {
        status = "okay";
    };

    &cpuapp_bellboard {
        status = "okay";
    };

    &uart135 {
        /delete-property/ hw-flow-control;
    };

The sample consists of two similar but distinct applications. The first runs on the application processor and sends messages using the IPC service where the first byte of the data cycles through capital alphabetical ASCII characters (A-Z). It concurrently receives data from the second application, which runs on the PPR and sends data with the first byte cycling through lowercase alphabetical ASCII characters (a-z) while receiving from the application processor. The callbacks provided by each application verify that the first byte of the data matches the expected character.
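
The cycling and verification logic is simple enough to sketch in isolation. The helpers below are hypothetical and are not taken from the sample; they only illustrate one way a receive callback could track the expected first byte.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Advance to the next character in [first, last], wrapping at the end. */
static uint8_t next_char(uint8_t c, uint8_t first, uint8_t last)
{
	assert(c >= first && c <= last);
	return (c == last) ? first : (uint8_t)(c + 1);
}

/* Hypothetical receive check: compare the first byte, then advance. */
static bool check_first_byte(const uint8_t *data, size_t len,
			     uint8_t *expected, uint8_t first, uint8_t last)
{
	if (len == 0 || data[0] != *expected) {
		return false; /* empty message or unexpected character */
	}
	*expected = next_char(*expected, first, last);
	return true;
}
```

The side receiving from the application processor would use the range 'A' to 'Z', and the side receiving from the PPR would use 'a' to 'z'.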

Similar to how we observed the application core configure and boot the PPR VPR processor in the last post, which will also take place in this sample, the MBOX and IPC “devices” will also be set up during kernel boot on each processor according to their compatible properties. For example, the PPR will configure an interrupt service routine (ISR) for each interrupt associated with the vevif_task_rx device.

    #if defined(CONFIG_GEN_SW_ISR_TABLE)
    #define VEVIF_IRQ_CONNECT(idx, _) \
        IRQ_CONNECT(DT_INST_IRQ_BY_IDX(0, idx, irq), DT_INST_IRQ_BY_IDX(0, idx, priority), \
                    vevif_task_rx_isr, &vevif_irqs[idx], 0)
    #else
    #define VEVIF_IRQ_FUN(idx, _) \
        ISR_DIRECT_DECLARE(vevif_task_##idx##_rx_isr) \
        { \
            vevif_task_rx_isr(&vevif_irqs[idx]); \
            return 1; \
        }

    LISTIFY(DT_NUM_IRQS(DT_DRV_INST(0)), VEVIF_IRQ_FUN, ())

    #define VEVIF_IRQ_CONNECT(idx, _) \
        IRQ_DIRECT_CONNECT(DT_INST_IRQ_BY_IDX(0, idx, irq), DT_INST_IRQ_BY_IDX(0, idx, priority), \
                           vevif_task_##idx##_rx_isr, 0)
    #endif

    static int vevif_task_rx_init(const struct device *dev)
    {
        nrf_vpr_csr_vevif_tasks_clear(NRF_VPR_TASK_TRIGGER_ALL_MASK);

        LISTIFY(DT_NUM_IRQS(DT_DRV_INST(0)), VEVIF_IRQ_CONNECT, (;));

        return 0;
    }

    DEVICE_DT_INST_DEFINE(0, vevif_task_rx_init, NULL, NULL, NULL, POST_KERNEL,
                          CONFIG_MBOX_INIT_PRIORITY, &vevif_task_rx_driver_api);

Similarly, the ipc_icmsg IPC backend will configure the transmitting and receiving MBOX, as well as the corresponding shared memory buffers for the cpuapp_cpuppr_ipc device.

    #define DEFINE_BACKEND_DEVICE(i) \
        static const struct icmsg_config_t backend_config_##i = { \
            .mbox_tx = MBOX_DT_SPEC_INST_GET(i, tx), \
            .mbox_rx = MBOX_DT_SPEC_INST_GET(i, rx), \
        }; \
        \
        PBUF_DEFINE(tx_pb_##i, \
                    DT_REG_ADDR(DT_INST_PHANDLE(i, tx_region)), \
                    DT_REG_SIZE(DT_INST_PHANDLE(i, tx_region)), \
                    DT_INST_PROP_OR(i, dcache_alignment, 0)); \
        PBUF_DEFINE(rx_pb_##i, \
                    DT_REG_ADDR(DT_INST_PHANDLE(i, rx_region)), \
                    DT_REG_SIZE(DT_INST_PHANDLE(i, rx_region)), \
                    DT_INST_PROP_OR(i, dcache_alignment, 0)); \
        \
        static struct icmsg_data_t backend_data_##i = { \
            .tx_pb = &tx_pb_##i, \
            .rx_pb = &rx_pb_##i, \
        }; \
        \
        DEVICE_DT_INST_DEFINE(i, \
                              &backend_init, \
                              NULL, \
                              &backend_data_##i, \
                              &backend_config_##i, \
                              POST_KERNEL, \
                              CONFIG_IPC_SERVICE_REG_BACKEND_PRIORITY, \
                              &backend_ops);

    DT_INST_FOREACH_STATUS_OKAY(DEFINE_BACKEND_DEVICE)

Upon finally reaching main, each application must access the IPC device via the assigned ipc0 label, then register an endpoint that sets up the channel over which to send and receive.

    static struct ipc_ept_cfg ep_cfg = {
        .cb = {
            .bound = ep_bound,
            .received = ep_recv,
        },
    };

    const struct device *ipc0_instance;
    struct ipc_ept ep;
    int ret;

    LOG_INF("IPC-service REMOTE demo started");

    ipc0_instance = DEVICE_DT_GET(DT_NODELABEL(ipc0));

    ret = ipc_service_open_instance(ipc0_instance);
    if ((ret < 0) && (ret != -EALREADY)) {
        LOG_ERR("ipc_service_open_instance() failure");
        return ret;
    }

    ret = ipc_service_register_endpoint(ipc0_instance, &ep, &ep_cfg);
    if (ret < 0) {
        LOG_ERR("ipc_service_register_endpoint() failure");
        return ret;
    }

    while (bound_sem != 0) {
    };

For the ICMsg backend, ipc_service_register_endpoint ultimately calls icmsg_open, which executes a bonding process that consists of writing a magic number to shared memory, then triggering an interrupt on the other processor.

    int icmsg_open(const struct icmsg_config_t *conf,
                   struct icmsg_data_t *dev_data,
                   const struct ipc_service_cb *cb, void *ctx)
    {
        if (!atomic_cas(&dev_data->state, ICMSG_STATE_OFF, ICMSG_STATE_BUSY)) {
            /* Already opened. */
            return -EALREADY;
        }

        dev_data->cb = cb;
        dev_data->ctx = ctx;
        dev_data->cfg = conf;

    #ifdef CONFIG_IPC_SERVICE_ICMSG_SHMEM_ACCESS_SYNC
        k_mutex_init(&dev_data->tx_lock);
    #endif

        int ret = pbuf_tx_init(dev_data->tx_pb);

        if (ret < 0) {
            __ASSERT(false, "Incorrect Tx configuration");
            return ret;
        }

        ret = pbuf_rx_init(dev_data->rx_pb);

        if (ret < 0) {
            __ASSERT(false, "Incorrect Rx configuration");
            return ret;
        }

        ret = pbuf_write(dev_data->tx_pb, magic, sizeof(magic));

        if (ret < 0) {
            __ASSERT_NO_MSG(false);
            return ret;
        }

        if (ret < (int)sizeof(magic)) {
            __ASSERT_NO_MSG(ret == sizeof(magic));
            return ret;
        }

        ret = mbox_init(conf, dev_data);
        if (ret) {
            return ret;
        }

    #ifdef CONFIG_MULTITHREADING
        ret = k_work_schedule_for_queue(workq, &dev_data->notify_work, K_NO_WAIT);
        if (ret < 0) {
            return ret;
        }
    #else
        notify_process(dev_data);
    #endif

        return 0;
    }
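
The first statement of icmsg_open is a compare-and-swap guard that lets exactly one caller move the state from off to busy, with every later caller receiving -EALREADY. The same idiom can be tried on a host machine with C11 atomics. This is a standalone sketch with hypothetical names (`ipc_link`, `ipc_link_open`), not the Zephyr atomic API.

```c
#include <assert.h>
#include <errno.h>
#include <stdatomic.h>
#include <stddef.h>

enum ipc_link_state {
	IPC_LINK_OFF,
	IPC_LINK_BUSY,
};

struct ipc_link {
	atomic_int state;
};

/*
 * Only the caller that observes IPC_LINK_OFF wins the transition to
 * IPC_LINK_BUSY; everyone else is told the link is already open.
 */
static int ipc_link_open(struct ipc_link *l)
{
	int expected = IPC_LINK_OFF;

	assert(l != NULL);
	if (!atomic_compare_exchange_strong(&l->state, &expected, IPC_LINK_BUSY)) {
		return -EALREADY;
	}
	return 0;
}
```

This explains why the application code shown earlier treats -EALREADY as a non-fatal result when opening the instance.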

To see this in action, we can build and flash the applications on the nRF54H20DK.

    west build -p -b nrf54h20dk/nrf54h20/cpuapp -T sample.ipc.icmsg.nrf54h20 .
    west flash

The application processor (HOST) console output can be viewed on /dev/ttyACM0, while the PPR (REMOTE) will be on /dev/ttyACM1.

    [00:00:00.161,970] <inf> host: IPC-service HOST demo started
    [00:00:00.162,028] <inf> host: Ep bounded
    [00:00:00.162,049] <inf> host: Perform sends for 1000 [ms]
    [00:00:01.162,077] <inf> host: Sent 664770 [Bytes] over 1000 [ms]
    [00:00:01.162,083] <inf> host: Wait 500ms. Let remote core finish its sends
    [00:00:01.662,141] <inf> host: Received 17941 [Bytes] in total
    [00:00:01.662,155] <inf> host: IPC-service HOST demo ended

    [00:00:00.138,843] <inf> remote: IPC-service REMOTE demo started
    [00:00:00.162,313] <inf> remote: Ep bounded
    [00:00:01.163,063] <inf> remote: Perform sends for 1000 [ms]
    [00:00:02.163,186] <inf> remote: Sent 36558 [Bytes] over 1000 [ms]
    [00:00:02.163,285] <inf> remote: Received 664770 [Bytes] in total
    [00:00:02.163,312] <inf> remote: IPC-service REMOTE demo ended

We can also follow Nordic’s debugging documentation to step through instructions on the application processor. The on-board J-Link debug probe allows us to use the J-Link GDB Server with the GDB included in the Zephyr toolchain. To target the nRF54H20DK, the following arguments should be provided.

JLinkGDBServer -select USB=0 -device Cortex-M33 -if SWD -speed auto -port 2331

The application processor will be selected as the debug target by default. west will automatically start GDB and connect to the GDB server with the attach command.

west attach

We can set a breakpoint on main, then continue until execution reaches it.

    (gdb) monitor reset
    Resetting target
    (gdb) b main
    Breakpoint 1 at 0xe0a6b44: file icmsg/build/icmsg/zephyr/include/generated/zephyr/syscalls/log_msg.h, line 37.
    (gdb) c
    Continuing.

    Breakpoint 1, main () at icmsg/build/icmsg/zephyr/include/generated/zephyr/syscalls/log_msg.h:37
    37      compiler_barrier();

If we view the PPR console output on /dev/ttyACM1 at this point, we can see that the processor booted and reached its own main, but has not yet started communicating with the application processor. This makes sense as the application processor configures and starts the PPR, but the PPR cannot start communicating with the application processor until both have established the IPC service connection.

[00:00:09.651,883] <inf> remote: IPC-service REMOTE demo started

As previously mentioned, establishing the ICMsg endpoint requires each processor to write a magic number into the shared memory buffer used for receiving by the other processor.

    static const uint8_t magic[] = {0x45, 0x6d, 0x31, 0x6c, 0x31, 0x4b,
                                    0x30, 0x72, 0x6e, 0x33, 0x6c, 0x69, 0x34};
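
The magic bytes are all printable ASCII, which makes them easy to spot in a hex dump. As a small aside, the following host-side snippet converts them to a string; `magic_to_string` is a helper made up for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

static const uint8_t magic[] = {0x45, 0x6d, 0x31, 0x6c, 0x31, 0x4b,
				0x30, 0x72, 0x6e, 0x33, 0x6c, 0x69, 0x34};

/* Copy the magic bytes into out and NUL-terminate; returns the length. */
static size_t magic_to_string(char *out, size_t out_size)
{
	assert(out != NULL && out_size > sizeof(magic));
	memcpy(out, magic, sizeof(magic));
	out[sizeof(magic)] = '\0';
	return sizeof(magic);
}
```

The result is the 13-character string "Em1l1K0rn3li4", so the length to look for in shared memory is 0x0d.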

If we inspect the memory at cpuppr_cpuapp_ipc_shm, we can see the magic number present at 0x2fc0fc28.

    (gdb) x/100bx 0x2fc0fc00
    0x2fc0fc00: 0x00 0x00 0x00 0x00 0x9d 0x57 0xe4 0x10
    0x2fc0fc08: 0x05 0x63 0x79 0x42 0x74 0x70 0xb5 0xf3
    0x2fc0fc10: 0x03 0x29 0x4e 0x89 0xfd 0xbc 0xc1 0xe0
    0x2fc0fc18: 0x90 0xe6 0x19 0xab 0xc3 0xd9 0xdf 0x4e
    0x2fc0fc20: 0x14 0x00 0x00 0x00 0x00 0x0d 0x00 0x00
    0x2fc0fc28: 0x45 0x6d 0x31 0x6c 0x31 0x4b 0x30 0x72
    0x2fc0fc30: 0x6e 0x33 0x6c 0x69 0x34 0x2b 0x00 0x00
    0x2fc0fc38: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0fc40: 0x53 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0fc48: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0fc50: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0fc58: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0fc60: 0x00 0x00 0x00 0x00
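
The bytes around the magic number hint at how the buffer is organized. Reading only from this dump, and treating the layout as an assumption rather than a documented format, it looks like a little-endian write index sits at offset 0x20 (matching dcache-alignment = <32>), followed at 0x24 by a four-byte header whose first two bytes hold a big-endian packet length, with the payload starting at 0x28. A sketch of a decoder under those assumptions:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Offsets inferred from the dump; treat them as assumptions. */
#define WR_IDX_OFFSET 0x20u /* matches dcache-alignment = <32> */
#define DATA_OFFSET   0x24u /* packet area appears to follow the write index */
#define LEN_HDR_SIZE  4u    /* the length header appears to occupy four bytes */

static uint32_t read_le32(const uint8_t *p)
{
	return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
	       ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

static uint16_t read_be16(const uint8_t *p)
{
	return (uint16_t)(((uint16_t)p[0] << 8) | p[1]);
}

/*
 * Return a pointer to the first packet's payload and store its length,
 * or NULL if the region is too small or the write index is still zero.
 */
static const uint8_t *first_packet(const uint8_t *region, size_t region_size,
				   uint16_t *len)
{
	assert(region != NULL && len != NULL);
	if (region_size < DATA_OFFSET + LEN_HDR_SIZE) {
		return NULL;
	}
	if (read_le32(region + WR_IDX_OFFSET) == 0u) {
		return NULL; /* nothing appears to have been written yet */
	}
	*len = read_be16(region + DATA_OFFSET);
	if (region_size < DATA_OFFSET + LEN_HDR_SIZE + (size_t)*len) {
		return NULL;
	}
	return region + DATA_OFFSET + LEN_HDR_SIZE;
}
```

Applied to the dump, this yields a 13-byte payload at offset 0x28. The write index of 0x14 (20) is also consistent with a 4-byte header plus 13 payload bytes rounded up to the next multiple of four.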

To observe the establishment of the endpoint on the application processor side, we can step through by placing breakpoints at the corresponding function calls.

    (gdb) b ipc_service_register_endpoint
    Breakpoint 2 at 0xe0a82f4: file zephyr/subsys/ipc/ipc_service/ipc_service.c, line 71.
    (gdb) b register_ept
    Breakpoint 3 at 0xe0b0e4e: file zephyr/subsys/ipc/ipc_service/backends/ipc_icmsg.c, line 20.
    (gdb) b icmsg_open
    Breakpoint 4 at 0xe0a8528: file zephyr/include/zephyr/sys/atomic_builtin.h, line 26.
    (gdb) b mbox_init
    Breakpoint 5 at 0xe0a85bc: file zephyr/subsys/ipc/ipc_service/lib/icmsg.c, line 244.

After the call to mbox_init, we can now see the magic number in the cpuapp_cpuppr_ipc_shm shared memory buffer as well (0x2fc0f828).

    (gdb) c
    Continuing.

    Breakpoint 2, ipc_service_register_endpoint (instance=0xe0b1d10 <__device_dts_ord_62>, ept=0x2f013d3c <z_main_stack+804>, cfg=0x2f0110e0 <ep_cfg>) at zephyr/subsys/ipc/ipc_service/ipc_service.c:71
    71      if (!instance || !ept || !cfg) {
    (gdb) c
    Continuing.

    Breakpoint 3, register_ept (instance=0xe0b1d10 <__device_dts_ord_62>, token=0x2f013d40 <z_main_stack+808>, cfg=0x2f0110e0 <ep_cfg>) at zephyr/subsys/ipc/ipc_service/backends/ipc_icmsg.c:20
    20      const struct icmsg_config_t *conf = instance->config;
    (gdb) c
    Continuing.

    Breakpoint 4, icmsg_open (conf=0xe0b2d00 <backend_config_1>, dev_data=0x2f011000 <backend_data_1>, cb=0x2f0110e8 <ep_cfg+8>, ctx=0x0) at zephyr/include/zephyr/sys/atomic_builtin.h:26
    26      return __atomic_compare_exchange_n(target, &old_value, new_value,
    (gdb) c
    Continuing.

    Breakpoint 5, mbox_init (dev_data=0x2f011000 <backend_data_1>, conf=0xe0b2d00 <backend_config_1>) at zephyr/subsys/ipc/ipc_service/lib/icmsg.c:244
    244     k_work_init(&dev_data->mbox_work, mbox_callback_process);
    (gdb) n
    245     k_work_init_delayable(&dev_data->notify_work, notify_process);
    (gdb) x/100bx 0x2fc0f800
    0x2fc0f800: 0x00 0x00 0x00 0x00 0xeb 0x6b 0x58 0x78
    0x2fc0f808: 0xad 0x48 0x70 0xdd 0x6d 0x9d 0xc6 0x0f
    0x2fc0f810: 0xe9 0x9a 0x62 0xd5 0xd1 0x8d 0x6b 0xe8
    0x2fc0f818: 0x44 0x65 0xb0 0x6a 0x60 0x01 0x4f 0x38
    0x2fc0f820: 0x14 0x00 0x00 0x00 0x00 0x0d 0x00 0x00
    0x2fc0f828: 0x45 0x6d 0x31 0x6c 0x31 0x4b 0x30 0x72
    0x2fc0f830: 0x6e 0x33 0x6c 0x69 0x34 0x00 0x00 0x00
    0x2fc0f838: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0f840: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0f848: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0f850: 0x00 0x00 0x00 0x00 0x00 0x31 0x00 0x00
    0x2fc0f858: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00
    0x2fc0f860: 0x6a 0x00 0x00 0x00

With the endpoints bound, continuing will result in the sample running as previously observed.

    [00:00:09.651,883] <inf> remote: IPC-service REMOTE demo started
    [00:24:00.195,396] <inf> remote: Ep bounded
    [00:24:01.196,088] <inf> remote: Perform sends for 1000 [ms]
    [00:24:02.196,186] <inf> remote: Sent 36558 [Bytes] over 1000 [ms]
    [00:24:02.196,285] <inf> remote: Received 664770 [Bytes] in total
    [00:24:02.196,312] <inf> remote: IPC-service REMOTE demo ended